The artificial intelligence (AI) revolution is reshaping global demand for digital infrastructure. Hyperscale data centers, which power machine learning, cloud services, and inference workloads, consume unprecedented levels of energy. And as graphics processing unit (GPU) density and computational intensity rise, traditional infrastructure models are struggling to deliver the speed and scale needed to meet the challenge.

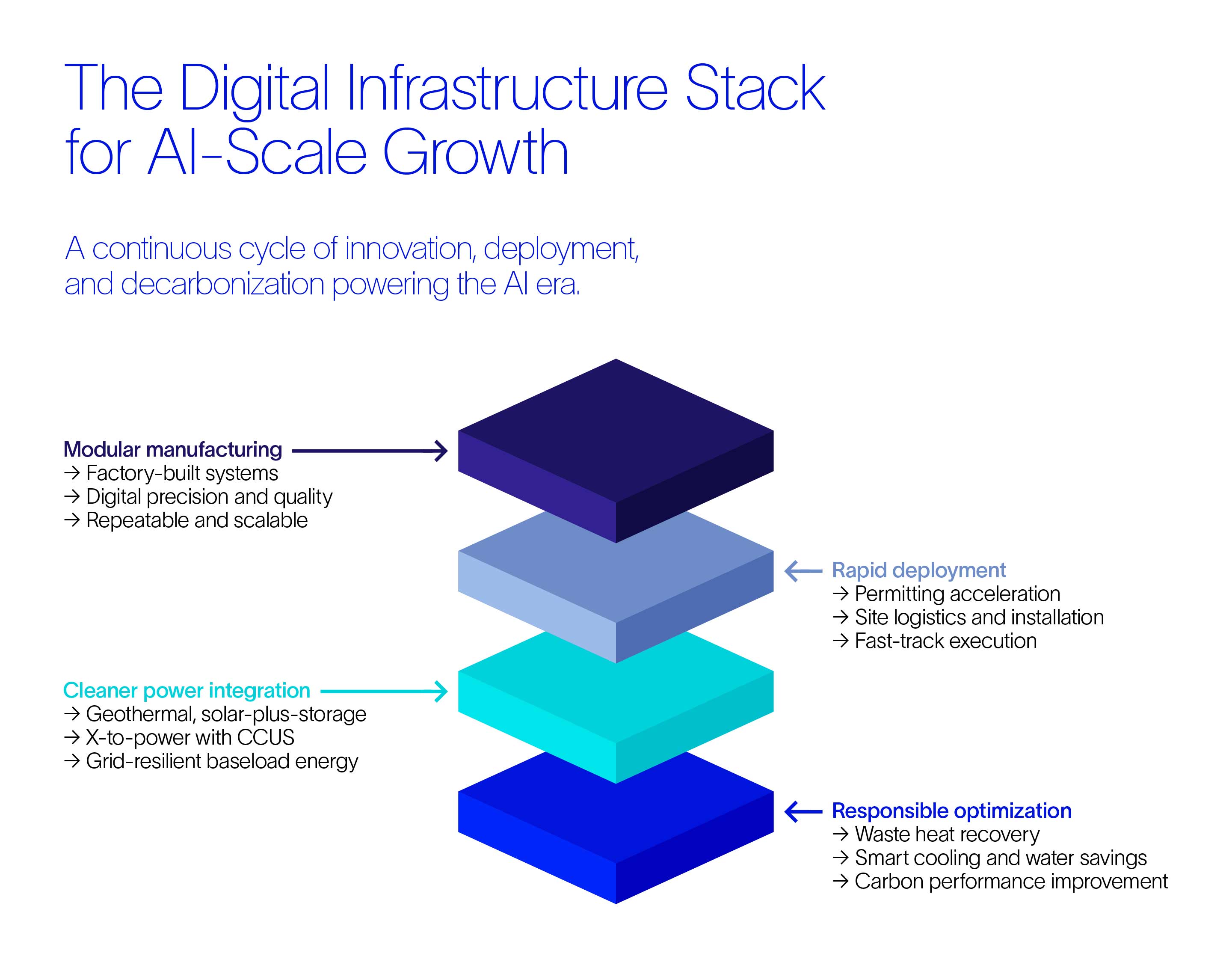

This transformation requires more than engineering innovation. It demands a new model of infrastructure delivery, one that integrates modular manufacturing, clean and baseload energy systems, and policy frameworks capable of matching AI’s exponential pace. That last piece is especially important because, as we’ve seen with initiatives like Winning the Race: America’s AI Action Plan, government support can play a critical role in removing structural barriers to digital growth.

The modular imperative

A critical component of tech deployment is the shift to more modular infrastructure, which delivers the speed, reliability, and scale required by AI-era builds. Modular delivery makes it possible to build the necessary infrastructure and introduce advanced tech, such as carbon capture, while compressing work that once took years into a fraction of the time. For example, factory-built modular systems for building infrastructure can cut deployment times by 30%–50%, thereby reducing construction risk and expediting time-to-operation.
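To make the 30%–50% range concrete, here is a minimal sketch of the arithmetic. The 24-month baseline and the function name are hypothetical, chosen only for illustration; they do not come from any specific project.

```python
def modular_schedule_months(baseline_months, reduction_low=0.30, reduction_high=0.50):
    """Return (best_case, worst_case) build time after a 30%-50% reduction."""
    best_case = baseline_months * (1 - reduction_high)
    worst_case = baseline_months * (1 - reduction_low)
    return best_case, worst_case

# Hypothetical conventional build time, for illustration only.
best, worst = modular_schedule_months(24)
print(f"Modular build: {best:.1f} to {worst:.1f} months (vs. 24 conventional)")
```

Under that assumed baseline, the modular build lands between roughly one year and just under a year and a half.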

In terms of consistency, digital fabrication ensures precision and quality across each build cycle. In some cases, modular switchrooms have reduced onsite labor by 84% and install time by 75%. Meanwhile, standardized, repeatable components make it easier to deploy across multiple sites, with 93% of “hyperscaler operators” (e.g., Amazon Web Services) currently planning to implement modular strategies at scale.

What’s the next step? As demand continues to grow, it is to integrate these capabilities with secure access to cleaner, dispatchable energy.

Designing infrastructure for training vs. inference AI

Not all data centers serve the same function. AI workloads come in two core types—each with unique infrastructure requirements:

- Training: These centers are usually located in remote areas, require massive energy input, and are optimized for computational density and cooling efficiency.

- Inference: Typically closer to urban users, these facilities demand low latency, grid resilience, and compact configurations with low power usage effectiveness (PUE).

Modular and prefabricated platforms can be customized for both, enabling repeatable, location-specific design and performance. In addition, integrated modular cooling is becoming essential to support AI-grade GPUs without compromising sustainability goals.

High-performance computational environments produce intense heat, putting new pressure on traditional cooling systems—particularly water-based ones. Operators are addressing this challenge through both advanced cooling tech (e.g., liquid immersion and phase-change systems) and hybrid closed-loop cooling systems. The former improves thermal transfer and cuts water usage; the latter delivers high-efficiency thermal performance while simultaneously minimizing water dependency.
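Cooling overhead feeds directly into PUE, which is the ratio of total facility energy to the energy used by IT equipment alone; a value close to 1.0 means little overhead. The sketch below shows the calculation. The energy figures and the two scenario names are hypothetical and are not measurements from any facility.

```python
def pue(it_energy_kwh, cooling_energy_kwh, other_overhead_kwh=0.0):
    """Power usage effectiveness = total facility energy / IT equipment energy."""
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    total = it_energy_kwh + cooling_energy_kwh + other_overhead_kwh
    return total / it_energy_kwh

# Hypothetical figures, for illustration only.
conventional = pue(it_energy_kwh=1000, cooling_energy_kwh=400, other_overhead_kwh=100)
advanced = pue(it_energy_kwh=1000, cooling_energy_kwh=100, other_overhead_kwh=100)
print(f"conventional cooling PUE: {conventional:.2f}")
print(f"advanced cooling PUE: {advanced:.2f}")
```

With these assumed inputs, cutting cooling energy is the only change between the two scenarios, which is why the second PUE is lower.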

Policy acceleration: The AI infrastructure opportunity

Public policy can be a bottleneck or a breakthrough. Recent moves, such as Winning the Race: America’s AI Action Plan in the United States, illustrate how new policies can accelerate critical infrastructure through measures such as streamlined permitting and grid readiness.

According to the US action plan, streamlined permitting refers to new categorical exclusions under the National Environmental Policy Act (NEPA) and aligned reviews under the Clean Air Act and the Clean Water Act that help eliminate long-standing regulatory delays. Meanwhile, grid readiness refers to the plan’s explicit call for developing “a grid to match the pace of AI innovation,” which includes preventing early retirement of critical power assets and modernizing transmission for clean energy distribution.

These examples underscore the role of agile policy in building with clarity, certainty, and speed.

Scaling cleaner, more reliable energy with decarbonized X-to-power solutions

As demand rises, data centers must run on power that is not only clean but also constant and controllable. Emerging tech now enables:

- Flexible, dispatchable electricity from gas-to-power systems integrated with carbon capture—cutting life cycle emissions while maintaining reliability.

- Geothermal supply to deliver greener 24/7 baseload energy, particularly suited to inference centers near metropolitan zones.

- Solar-plus-storage for deployments in remote regions or areas with constrained grid infrastructure.

Metrics differ from source to source, but the consensus is that the scale of today's numbers defines both the size of the challenge and the size of the opportunity. Some cite the additional 186 gigawatts of power demanded by data centers in the US alone. Others point to projections that carbon capture-equipped facilities will generate 900 terawatt hours in 2040 while avoiding 300 metric tons of carbon dioxide equivalent per year. Waste reduction can also contribute to the carbon savings of modern data centers, with waste heat strategies improving not only sustainability but also bottom-line performance when embedded from day one.

Today's novel designs can turn waste into an opportunity. While most traditional data centers waste more than 60% of input energy as heat, that expelled heat can be redirected to support district heating or secondary systems. Heat-recovery technologies of this kind can raise total system efficiency to 80% or more, lowering Scope 1 and 2 emissions as a result.
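Read one way, the 60% and 80% figures imply a rough target for heat recovery. The sketch below assumes a simplified model that is not defined in the source: total system efficiency equals the share of input energy that is not wasted plus the share of waste heat that is reused. The function names are hypothetical.

```python
def total_system_efficiency(waste_heat_share, recovered_fraction):
    """Simplified model: non-wasted share plus the reused part of the waste heat."""
    useful_share = 1.0 - waste_heat_share
    return useful_share + waste_heat_share * recovered_fraction

def required_recovery(waste_heat_share, target_efficiency):
    """Fraction of waste heat that must be reused to reach the target efficiency."""
    useful_share = 1.0 - waste_heat_share
    return max(0.0, (target_efficiency - useful_share) / waste_heat_share)

# Figures taken from the paragraph above: 60% lost as heat, 80% target.
needed = required_recovery(waste_heat_share=0.60, target_efficiency=0.80)
print(f"Share of waste heat to recover: {needed:.0%}")
```

Under this assumed model, about two-thirds of the expelled heat would need to find a use, such as district heating, to reach the 80% mark.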

A new operating model rooted in collaboration

No single entity can deliver AI infrastructure alone, which makes ecosystem collaboration the only viable path to scale. For example, energy technology companies and energy providers are collaborating with data operators to move electrons efficiently across borders. This reflects a strategic shift by utilities and energy companies toward generating and transmitting electricity from emerging or previously underutilized regions that have access to cleaner energy sources.

Meanwhile, governments are crafting agile policies and incentive structures to attract investment in faster infrastructure buildout, while industrial alliances share modular standards and deployment strategies to speed up co-location buildouts and drive volume efficiency gains.

All of these collaborations enable more cost control, scalability, and community alignment.

Futureproofing the growth of our digital infrastructure

To remain competitive, infrastructure must evolve alongside computational demands. That means modular retrofits, with their improved efficiency, will replace outdated systems and add capacity quickly. In addition, flexible site design will support evolving chipsets and cooling profiles, while smarter energy management will continue to better align operations with carbon and price signals.
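As one illustration of aligning operations with carbon and price signals, the sketch below picks the hours in which to run a deferrable workload, such as a batch training job. The hourly values, the equal weighting, and the function name are all hypothetical; a real system would draw on forecasts from a grid operator or utility.

```python
def pick_run_hours(carbon_g_per_kwh, price_per_mwh, hours_needed, carbon_weight=0.5):
    """Choose the hours with the lowest blend of normalized carbon and price."""
    if len(carbon_g_per_kwh) != len(price_per_mwh):
        raise ValueError("signals must cover the same hours")

    def normalize(values):
        lo, hi = min(values), max(values)
        span = (hi - lo) or 1.0  # avoid division by zero for a flat signal
        return [(v - lo) / span for v in values]

    carbon_n = normalize(carbon_g_per_kwh)
    price_n = normalize(price_per_mwh)
    scores = [carbon_weight * c + (1 - carbon_weight) * p
              for c, p in zip(carbon_n, price_n)]
    ranked = sorted(range(len(scores)), key=lambda hour: scores[hour])
    return sorted(ranked[:hours_needed])

# Hypothetical six-hour forecast, for illustration only.
carbon = [450, 420, 300, 180, 200, 380]
price = [60, 55, 40, 25, 30, 70]
print(pick_run_hours(carbon, price, hours_needed=2))
```

Raising `carbon_weight` toward 1.0 favors low-carbon hours over cheap ones; lowering it does the opposite.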

The future of AI infrastructure depends on eliminating deployment delays, securing cleaner and more reliable power, and optimizing performance at scale. Modular systems, decarbonized X-to-power generation, and forward-looking policy are not optional; they are foundational. The tech industry is fast-paced by nature, and the ecosystem enabling its exponential growth requires orchestrating the full value chain from energy creation to infrastructure build and deployment. The goal? To operationalize these strategies into a repeatable, high-assurance delivery model that matches the speed of innovation.