In recent years, oil and gas companies have made significant strides in understanding their emissions footprints. Many have begun to pinpoint their largest contributors, track progress, and benchmark their performance against internal and external targets, as well as industry standards. It’s an approach that ensures transparency and accountability, which is critical for maintaining stakeholder trust and meeting compliance requirements (e.g., mandatory climate disclosures and emissions caps).

Forecasting complements these efforts by providing industry players with a forward-looking perspective. It enables them to anticipate future emissions trends, either under business-as-usual scenarios or after the implementation of new strategies, and to become more proactive in managing their business as a result. They can use these predictive insights to evaluate the effectiveness of planned decarbonization initiatives, optimize resource allocation, and identify potential risks (e.g., the costs associated with a low-carbon model).

That said, when companies begin to consider forecasting their emissions, they quickly realize what a multifaceted problem it is. It involves multiple, interdependent variables affected by seasonality, abatement projects, market conditions, carbon taxes, and other external factors. It’s also a multilevel problem: emissions forecasts at the asset level (and sometimes at the field level) need to be consistent with the forecasts at both country and corporate levels. Finally, the forecast results must be trustworthy enough to steer the business in the right direction and support climate goals, and that level of confidence cannot be achieved without high-quality data.
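The multilevel consistency requirement can be illustrated with a minimal bottom-up sketch: asset-level series are summed into country and corporate totals, and a separately produced corporate forecast is compared against that roll-up. The country names, asset names, numbers, and function names below are all hypothetical, and bottom-up summation is only one of several possible reconciliation approaches.

```python
# Hypothetical asset-level forecasts in tCO2e per quarter.
# Country names, asset names, and all numbers are illustrative assumptions.
asset_forecasts = {
    ("Norway", "Asset A"): [120.0, 115.0, 110.0],
    ("Norway", "Asset B"): [80.0, 82.0, 79.0],
    ("Brazil", "Asset C"): [200.0, 190.0, 185.0],
}


def aggregate_bottom_up(forecasts):
    """Sum asset-level series into country-level and corporate-level series."""
    horizon = len(next(iter(forecasts.values())))
    country_totals = {}
    corporate_total = [0.0] * horizon
    for (country, _asset), series in forecasts.items():
        totals = country_totals.setdefault(country, [0.0] * horizon)
        for t, value in enumerate(series):
            totals[t] += value
            corporate_total[t] += value
    return country_totals, corporate_total


def coherence_gaps(top_level_forecast, aggregated_forecast):
    """Per-period gap between an independent top-level forecast and the roll-up."""
    return [top - agg for top, agg in zip(top_level_forecast, aggregated_forecast)]


country_totals, corporate_total = aggregate_bottom_up(asset_forecasts)

# A corporate forecast produced separately (hypothetical values) will rarely
# match the bottom-up sum exactly; the gaps show where the levels disagree.
independent_corporate = [410.0, 387.0, 370.0]
gaps = coherence_gaps(independent_corporate, corporate_total)
```

Any nonzero gap signals that the levels tell different stories for that period and that some form of reconciliation is needed before the numbers are used for planning.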

Poor or inaccurate inputs result in flawed and unreliable outputs. Numerous methods are now available for properly estimating emissions data, yet most companies still rely on a combination of static models and more traditional statistical techniques, such as autoregressive integrated moving average (ARIMA) or nonlinear averages. While these methods provide a general approximation, they are labor-intensive and time-consuming when done manually. They also frequently fail to account for variations, which leads to inaccurate outcomes (especially when dealing with highly divergent or nonlinear data sets).
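To make the limitation concrete, here is a minimal sketch of a trailing moving-average forecast applied to an accelerating (nonlinear) series. This is a deliberately simplified stand-in for the averaging family of techniques, not an ARIMA implementation, and the series itself is synthetic rather than real emissions data.

```python
def moving_average_forecast(history, window, horizon):
    """Forecast each future step as the mean of the last `window` values,
    feeding every forecast back in as if it were an observation."""
    values = list(history)
    forecasts = []
    for _ in range(horizon):
        next_value = sum(values[-window:]) / window
        forecasts.append(next_value)
        values.append(next_value)
    return forecasts


# Synthetic, accelerating series (t squared); purely illustrative.
history = [float(t * t) for t in range(1, 9)]
actual_next = [float(t * t) for t in range(9, 12)]

forecast = moving_average_forecast(history, window=3, horizon=3)

# Positive errors mean the forecast falls short of the actual values.
errors = [actual - predicted for actual, predicted in zip(actual_next, forecast)]
```

Because every forecast is an average of recent values, it can never exceed the latest peak, so on a series that keeps accelerating the shortfall grows with each step of the horizon.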

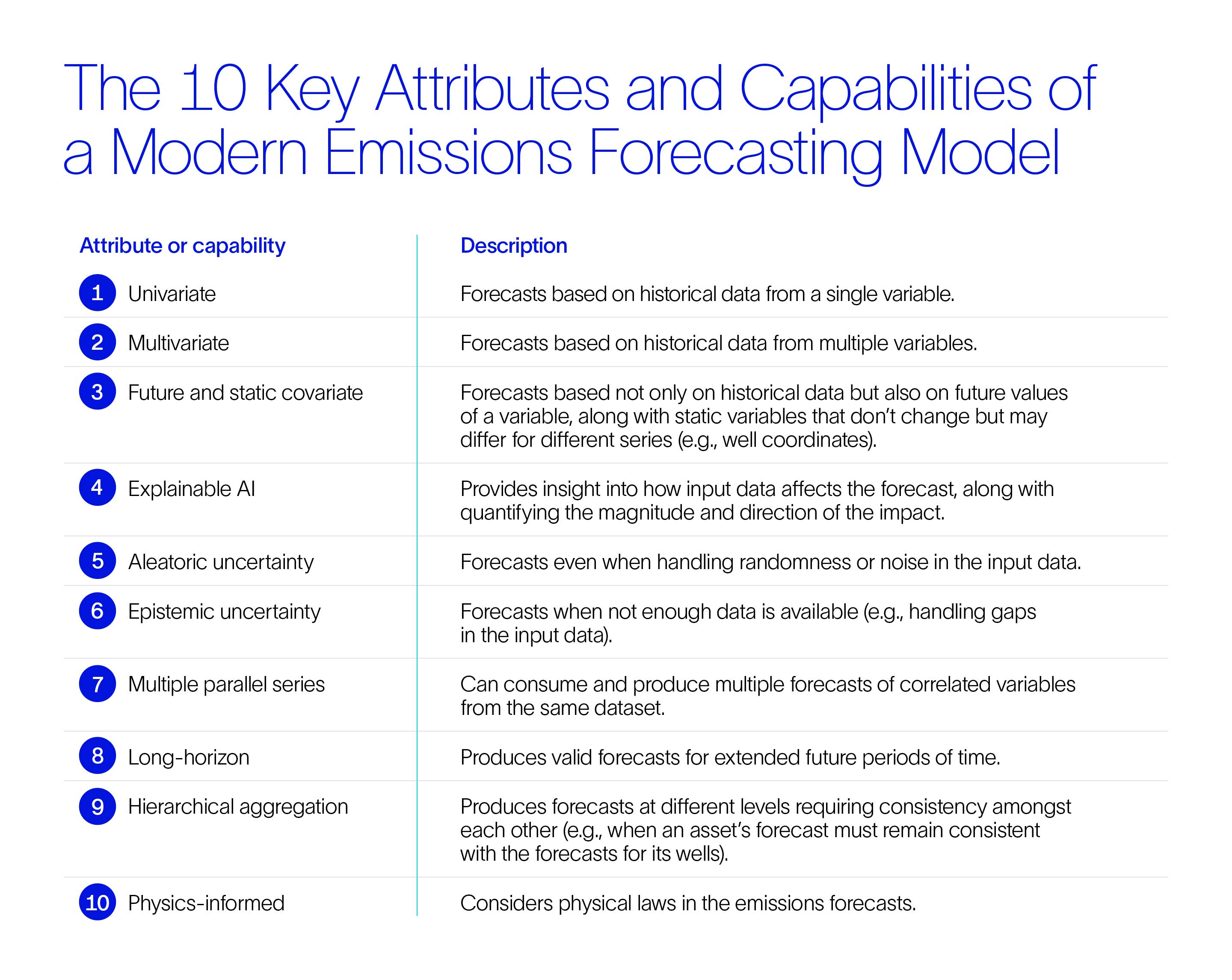

In fact, there are 10 key attributes or capabilities needed for an ideal modern emissions forecasting model. These can be layered in increasing levels of sophistication, as shown in the accompanying figure.

It’s challenging to fulfill all of these requirements. Traditional forecasting methods, such as ARIMA and regression models, struggle with nonlinear patterns and fail to account for external influencing factors. More recent neural network-based models, such as transformers, can capture those nonlinearities, along with the dependencies and relationships in the data, through self-attention mechanisms. These models are, however, more costly to run and offer limited interpretability, because they do not represent cause-and-effect relationships.

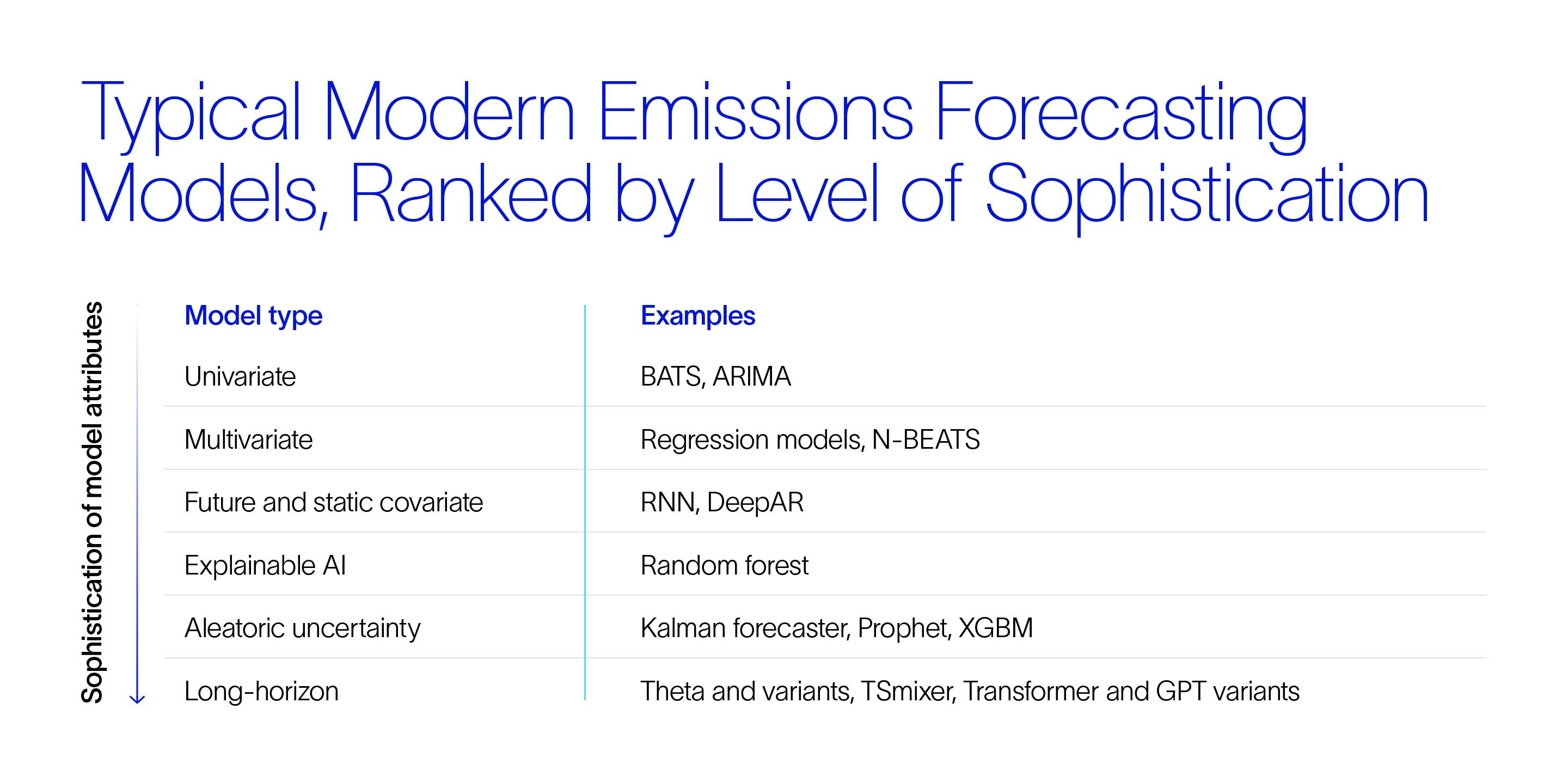

As the accompanying figure shows, there are a variety of models available. Some of them (e.g., random forests and other tree-based models) can, on their own, quantify only the magnitude of the impact made by new input data. Post-hoc algorithms (e.g., SHapley Additive exPlanations, also known as SHAP) can be used to quantify both the magnitude and the direction of that impact. It’s also worth noting that some of these base models, though not all, can be used to reach different levels of sophistication.
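The idea behind Shapley-based attribution can be sketched by computing exact Shapley values through brute-force enumeration on a toy model. This is not the SHAP library, which relies on far more efficient approximations. The model, its coefficients, the feature names, and the input values are all hypothetical.

```python
from itertools import permutations
from math import factorial


def toy_emissions_model(x):
    """Hypothetical model: emissions rise with production and flaring
    (including an interaction between them) and fall with abatement."""
    return (
        2.0 * x["production"]
        + 5.0 * x["flaring"]
        - 3.0 * x["abatement"]
        + 0.5 * x["production"] * x["flaring"]
    )


def shapley_values(model, instance, baseline):
    """Exact Shapley values: average each feature's marginal contribution
    over every order in which features switch from baseline to instance."""
    features = list(instance)
    contributions = {name: 0.0 for name in features}
    for order in permutations(features):
        current = dict(baseline)
        previous_output = model(current)
        for name in order:
            current[name] = instance[name]
            new_output = model(current)
            contributions[name] += new_output - previous_output
            previous_output = new_output
    n_orders = factorial(len(features))
    return {name: total / n_orders for name, total in contributions.items()}


# Illustrative inputs only.
baseline = {"production": 8.0, "flaring": 1.0, "abatement": 1.0}
instance = {"production": 10.0, "flaring": 2.0, "abatement": 3.0}

attributions = shapley_values(toy_emissions_model, instance, baseline)
total_change = toy_emissions_model(instance) - toy_emissions_model(baseline)
```

The sign of each attribution gives the direction of the effect (the abatement value comes out negative here), its size gives the magnitude, and the attributions sum to the total change in the model output between the baseline and the instance.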

So, what does an ideal model look like? It’s a model that uses state-of-the-art AI methodologies to bridge the divide between traditional statistical methods and neural network-based models, combining the best of both worlds to capture data nuances and interdependencies. It yields long-term forecasts in a computationally efficient manner by integrating deep learning with specific time-series forecasting principles, enriched with real-world physics and business rules. This approach enables the efficient production of long-horizon forecasts for multiple parallel series, while keeping the results consistent with external dependencies and constraints (think hierarchical aggregations, physical models, and inputs on future expectations).

The forecast data generated by such a model is becoming essential for decarbonization scenarios, with companies using the input to create more accurate and realistic plans to meet their net-zero targets. Similarly, these decarbonization plans can also leverage AI to recommend the right initiatives, acting as a subject matter expert would, to help achieve the desired outcomes.

It’s clear that the AI revolution can do more than just help us make sense of the data we have today. We can also leverage it to understand the future to come—at least, that’s what forward-thinking organizations are doing (no pun intended).