Maximize, elevate, and scale data-driven decision making, with AI.

Six lessons learned from deploying real-world domain AI solutions

Published: 05/03/2025

You have invested significant time learning Python programming. You know the APIs of libraries like PyTorch, scikit-learn, pandas, and NumPy like the back of your hand. You have challenged yourself with Kaggle competitions, climbing up the leaderboard with each new submission. Armed with this knowledge and knowing that you can solve any AI challenge thrown your way, you enter your first real-world AI project.

But you are in for a major shock: there is a massive gap between expectation and reality. Rather than just dealing with code, which has no emotions, you are dealing with a complex network of human stakeholders. All of them want the same goal (an AI project that delivers on its promise and generates value) but have wildly different views on how to get there. At this point, you think, ‘I wish there was someone I could talk to about all this’. If you find yourself in this situation, this is the article for you.

Building and deploying AI in geosciences is more than creating models; it’s a journey riddled with unique challenges. From refining vague problem statements to designing user-friendly interfaces and understanding the human factors that apply to each step of the machine learning (ML) process, success requires more than just technical skills. Here are a few key lessons I’ve learnt from real-world experiences intended to help someone who has taken up the challenge of managing or deploying a domain-AI project.

1. Recognize critical human factors that affect each step of the process

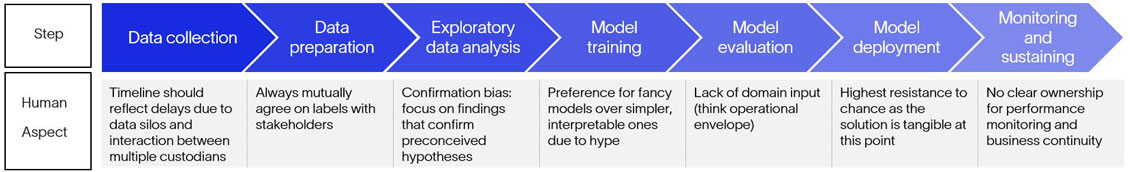

While most learning pathways focus on the technical aspects, there are very tangible human elements that can impact the timelines and probability of success for each step. Understanding these factors is key. Here is a generic flow for a machine learning task (an AI workflow involving large language models (LLMs) will differ at certain stages, but the concept remains the same).

As seen above, you will encounter unique challenges every step of the way. Anticipating them and planning proactively will set you up for success from the start.

2. Go beyond models: Think about end-users and engineering

End users: Having a model work well is just half of the aim. The other half is ensuring that people who interact with the tool or application find it easy, and if you’re lucky, a joy to work with. This is especially true of LLM-based applications where mouse-clicking has been replaced by typing text, often with the quality of prompts influencing the model’s output.

It’s unfortunate that, during development, the coolness of training a model or crafting a prompt often steals the limelight from the user interface, making it an afterthought. However, understanding how end users will interact with the final product should guide every development decision. Will it be integrated into a mobile app, a desktop application, or a web tool? Will users need real-time predictions, or can they work with batch outputs?

One simple solution is to include a wireframing session, either on its own or as part of a design-thinking workshop conducted at the start of the project. A wireframe or a low-fidelity mockup of the workflows can help users visualize how they will interact with the final product. This is even more critical because the domain expert on the stakeholder side who approves the project might not be part of the end-user group.

Engineering: In practice, machine learning engineering turns out to be more about engineering than machine learning itself. There are many parts that must work together for your product to get released on time. Ensure that sufficient focus is placed on all the associated engineering—model deployment, the underlying infrastructure, provisions for scalability, model retraining, to name a few.

3. Make a dedicated effort to set the right expectations from the outset

Expectation management has always been a crucial aspect of project management, but it has become increasingly challenging with AI added to the mix. Nothing has done more to bring AI to the mainstream than the ability to have entire conversations with machines, powered by large language models. With this phenomenon have come sky-high expectations of what AI can do. Naturally, this perception has also spilled over to key stakeholders in our industry. One of the main things we started doing after learning from previous projects was spending dedicated time setting realistic expectations of what AI can and cannot do. This means actively investing time to cut through the hype: exposing AI’s limits, risks, and misleading claims, while offering clear guidance on when to trust AI in the context of your domain.

4. Describe project requirements comprehensively

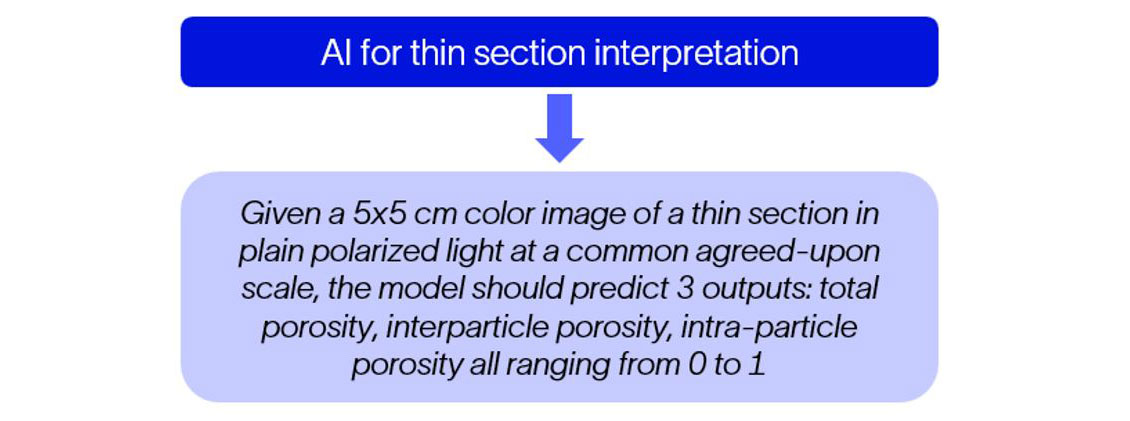

Many AI projects start with broad, ambiguous goals like “building a tool for seismic interpretation” or “creating an AI model for borehole image analysis.” While these statements are great, a key aspect of ensuring success of the AI project is to keep digging until one reaches a point where no more details can be added to the problem statement.

For instance, does seismic interpretation refer to just detecting fractures and faults, or identifying their orientation too? Without clarity, efforts risk being misaligned with user needs.

To avoid this, take the time to dig deeper. When you are leading a project as an expert, ask as many probing questions as possible to progressively add more detail to the problem statement. Your background as an AI expert enables you to ask pertinent questions that the stakeholders might not even consider important. Eventually, you should get to a point where your problem statement is as granular as:

"Given a 5x5 cm color image of a thin section in plane-polarized light at a common agreed-upon scale, the model should predict three outputs: total porosity, interparticle porosity, and intraparticle porosity, all ranging from 0 to 1."

Going from ‘AI for thin section interpretation’ to the above statement is probably the best step you can take to avoid trouble later. Clear definitions not only ensure alignment but also make it easier to measure success and communicate results.
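One way to make such a statement harder to misread is to write it down as a small, checkable contract. The sketch below is only an illustration of that idea, not the project's actual code: the class name `PorosityPrediction`, the helper `check_input_size`, and the 0.01 cm tolerance are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PorosityPrediction:
    """Hypothetical output contract: three porosity fractions, each in [0, 1]."""

    total: float
    interparticle: float
    intraparticle: float

    def __post_init__(self):
        # Reject any output outside the range agreed in the problem statement.
        for name in ("total", "interparticle", "intraparticle"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} porosity must be in [0, 1], got {value}")


def check_input_size(width_cm, height_cm, expected_cm=5.0, tol_cm=0.01):
    """Return True when the image covers the agreed 5x5 cm area.

    The tolerance is an assumed value; the real one would be agreed
    with the stakeholders.
    """
    return (
        abs(width_cm - expected_cm) <= tol_cm
        and abs(height_cm - expected_cm) <= tol_cm
    )


prediction = PorosityPrediction(total=0.20, interparticle=0.15, intraparticle=0.05)
print(prediction)
print(check_input_size(5.0, 5.0))
```

Writing the contract this way tends to surface the same kind of questions a workshop would: what happens to images that fail the size check, and who decides the tolerance.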

Additionally, framing the problem at this level of detail lets users picture the end goal better, which triggers additional questions, helps de-risk the project, and improves estimates. For example, a statement like this tends to raise questions or concerns such as the following (inspired by a real-world scenario):

- “Some of our images might not be of the same size” (impact: factor into pre-processing estimates).

- “Some of our images are not stained with blue dye” (impact: factor time into image labeling).

- “We require customized software to open certain thin section file formats” (impact: factor into data loading timeline).

5. Bridge the domain and data science gap

Solving a problem in geosciences using AI requires interdisciplinary expertise. Relying only on AI expertise is risky because, without the right domain knowledge, it is easy to misinterpret the data. One technique is to build a team made up of data scientists and domain experts so that they can fill each other’s knowledge gaps. Another strategy with a demonstrated history of working well is onboarding a "domain data scientist" (someone fluent in both geoscience and AI) to bridge the gap between domain-specific requirements and technical implementation.

Domain data scientists can analyze complex datasets and use their petrotechnical expertise to extract valuable insights before proposing innovative solutions. To put it lightly, think of them as domain LLMs fine-tuned on an AI/ML corpus. Additionally, when defending the solution in front of a tough technical review committee, it helps to be able to speak both languages.

6. Effective communication: Focus on what the stakeholders care about

Having stakeholders sign off on project completion often boils down to a single successful presentation. This meeting can go by different names: discipline committee review, milestone meeting, steering committee review, etc.

Regardless of the name, this meeting will consist of a mix of domain experts, project managers, and if you’re lucky, AI/ML experts. With limited time to convey the value of the work done, it’s imperative to express results in a way that makes sense to them and highlight the elements they care about.

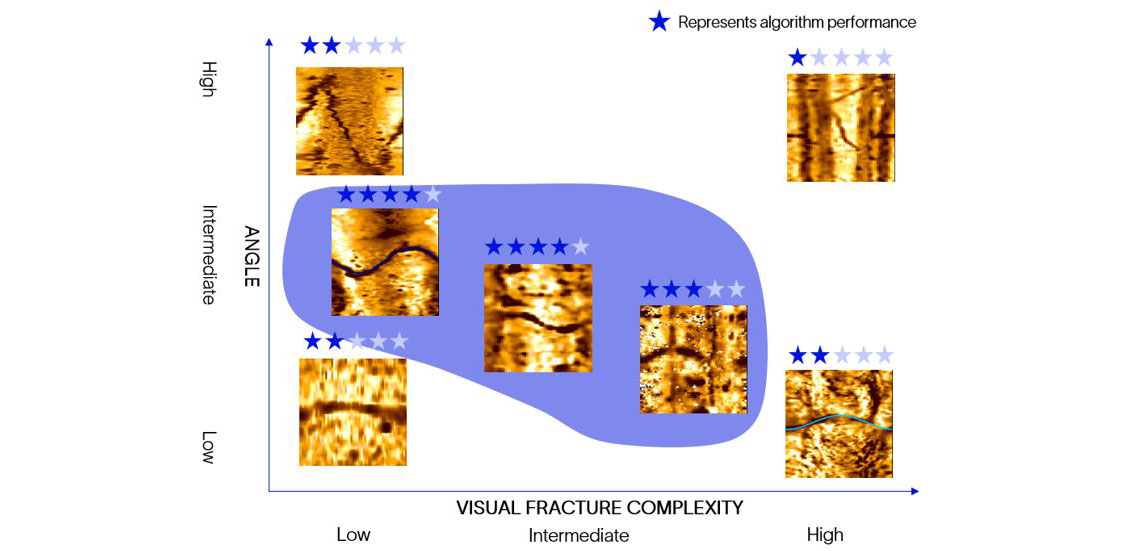

For example, in a recent project to automate picking dips from borehole images, we were asked to describe what the customer referred to as an operating envelope—i.e. where and how well the model would work across a range of subsurface conditions. Hence, for this image interpretation project, we created a unique 2D chart rather than providing a dense report. We plotted visual fracture complexity (how easy or hard it is for a human to visually delineate fractures in each image) on the x-axis, dip on the y-axis, and assigned star ratings for model accuracy.

This intuitive visualization immediately communicated where the model excelled and where it struggled, enabling them to determine where best to use the tool, all while avoiding text-based descriptions which would have been much harder to cover in a tight meeting. Similarly, think about creating bespoke visualizations or product demonstrations that focus on what matters most to your audience.
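The structure of such an operating envelope can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the chart from the project: the bin labels, the five-star scale, the linear accuracy-to-stars mapping, and every accuracy value below are made-up placeholders.

```python
def stars(accuracy, max_stars=5):
    """Map an accuracy in [0, 1] to a star string (assumed linear mapping)."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be in [0, 1]")
    filled = round(accuracy * max_stars)
    return "*" * filled + "." * (max_stars - filled)


def render_envelope(scores, complexity_bins, dip_bins, max_stars=5):
    """Render a text grid: columns are visual fracture complexity, rows are dip."""
    label_width = max(len(d) for d in dip_bins) + 2
    cell_width = max(max_stars, max(len(c) for c in complexity_bins)) + 2
    header = " " * label_width + "".join(c.ljust(cell_width) for c in complexity_bins)
    lines = [header]
    for dip in reversed(dip_bins):  # highest dip on top, like a y-axis
        cells = "".join(
            stars(scores[(c, dip)], max_stars).ljust(cell_width)
            for c in complexity_bins
        )
        lines.append(dip.ljust(label_width) + cells)
    return "\n".join(lines)


# Hypothetical bins and placeholder accuracies, for illustration only.
complexity_bins = ["low", "medium", "high"]
dip_bins = ["0-30 deg", "30-60 deg", "60-90 deg"]
example_scores = {
    ("low", "0-30 deg"): 0.92, ("medium", "0-30 deg"): 0.85, ("high", "0-30 deg"): 0.62,
    ("low", "30-60 deg"): 0.88, ("medium", "30-60 deg"): 0.74, ("high", "30-60 deg"): 0.45,
    ("low", "60-90 deg"): 0.66, ("medium", "60-90 deg"): 0.48, ("high", "60-90 deg"): 0.25,
}

print(render_envelope(example_scores, complexity_bins, dip_bins))
```

The point of the layout is that each cell answers one question a reviewer is likely to ask: how well does the model work for this kind of image at this dip range?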

Overall, deploying AI-based solutions to tackle hard challenges in geosciences is a complex yet rewarding process. However, by being acutely aware of the human aspect, especially in a strongly polarized, hype-driven AI world, you can ward off some of the horsemen of domain AI project failure.

Rasesh Saraiya

AI and Innovation Factori team lead

Rasesh Saraiya leads the AI/INNOVATION FACTORI team for SLB Qatar, driving innovation at the intersection of geoscience and technology. In his previous role as Domain Data Scientist Program Manager at SLB HR Headquarters, he was responsible for an industry-first initiative to train domain experts as data scientists. With a deep passion for geoscience and AI, Rasesh has gained broad experience living and working in India, Nigeria, Saudi Arabia, and Qatar during his eight years at SLB.